Back in early 2017, I put up a “state of my gear” post. My intent was to document the gear I used, so I could come back and see how it changed over time and with changes in my interests, style, and subject matter. A year has now passed, and I’m going to do that dance all over again since things have changed.

My intent is to look more introspectively into the craft in part by looking at how my evolution as a photographer has changed my perspective on gear. Additionally, I’ve found that the gear I use can have a material impact on the way I go about approaching my vision, and I want to see if I can identify trends with that too.

As an example, in reading through my article from a year ago, there are more than a few places where conclusions I reached back then have been reversed, or at least where I’ve gone off in a different direction. Certainly some of the expectations I’ve had for equipment in the past have proven to be wrong when put into practice.

Finally, like the last time, this isn’t an extensive list of every nut and bolt of camera gear I own. Major things, and things I use a lot, are most likely to be covered. However, there are no hard criteria for what does and doesn’t make the list.

Read the rest of the story »

Canon just announced 2 new “updated” 70-200mm pro zoom lenses: the third-generation EF 70-200mm f/2.8L IS III USM and the second-generation EF 70-200mm f/4L IS II USM. Of course, as with any new product release, there’s a lot of talk around the net about these lenses, what Canon has and hasn’t done with them, and ultimately why Canon is doomed because of what they did or didn’t do.

Anyway, I can’t help but throw my 2 cents into the fray on this discussion. So here it goes.

Read the rest of the story »

Many years ago, when I was first getting started with photography, Sports Shooter posted a set of portfolio review videos. One of these reviews stuck out in my mind. A student’s portfolio had 2 images of a baseball pitch in flight with the ball in focus and the pitcher, catcher, batter and so forth out of focus.

Several of the reviewers commented on the inclusion of two of these images in this person’s portfolio. He had already shown he could make that kind of image; repeating it didn’t add any value to the portfolio, and generally wasted precious space.

Portfolios are showcases for an artist. They should show off the individual’s best work and their range as an artist. Repeating similar images doesn’t work towards that goal; it just pads out space.

You could almost go as far as arguing that it wastes the time of the reviewer, since they’re just looking at an additional version of the same image.

Read the rest of the story »

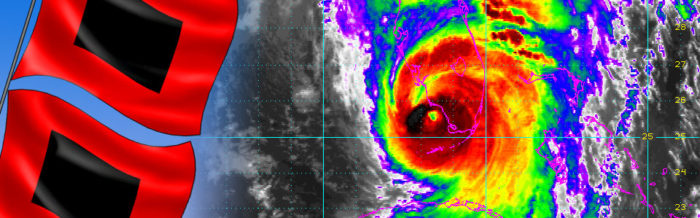

Update 2: Monday 2017-09-18

This is really not the year to be a Floridian, and an even worse year to live in the eastern Caribbean. First, we got smashed by Hurricane Irma, which, while I don’t want to downplay the impacts of that storm, certainly could have been a lot worse. If it hadn’t been for the interactions with Cuba (which of course was bad for them) and the dry air that caused the back end of the storm to fall apart, it almost certainly would have been a lot worse.

Right on the coattails of Irma was Jose, which also rapidly intensified into a major hurricane, then just barely squeaked by the already decimated Leeward Islands that Irma had just finished with. Thankfully for everyone in the Bahamas and on the Southeastern US coast, Jose turned out to sea and has largely not been a huge factor so far. And while Jose is forecast to make a close approach to New England, and potentially loop around in that area, it’s now moving over cooler waters that will seriously hamper its ability to maintain its intensity.

Read the rest of the story »

Nikon finally announced their latest high-end/pro-level DSLR, the D850. Honestly, there’s a lot to talk about with it both as an upgrade over the old D810, as part of Nikon’s current lineup of cameras, and with respect to Nikon’s future prospects in the industry.

Recent times have not been good to Nikon. Camera sales have been down, and of the big camera makers, Nikon is arguably the most vulnerable to declines in camera sales. Moreover, Sony has been aggressively trying to move into the market space, and most of their gains have been coming at Nikon’s expense.

Compounding matters, Nikon’s D800 series cameras can certainly be perceived as being extremely long in the tooth at this point.

Nikon’s first real attempt to get things moving again, so to speak, was the D5 and D500, cameras that were simultaneously impressive and disappointing.

In many ways, the D5, and to a much lesser extent the D500, looked to me like a panicked response by terrified management that saw market share eroding and could only think of how to inflate numbers to make something that looked, in their eyes at least, competitive.

Fortunately for Nikon users, the initial flailing response that the D5 and D500 seemed to embody seems to have passed. Nikon seems to have remembered what matters: not putting bigger and bigger numbers on a box to delude gullible photographers into buying the latest thing, but providing a solid tool that photographers can use to produce the images they want to make.

Looking at the announced specs, and some very preliminary performance estimates that have surfaced, the D850 looks to be exactly the camera Nikon needs right now. So let’s dive into some of the announced specs, and what some preliminary testing implies.

Read the rest of the story »

When push comes to shove, I have no idea what’s going on in the minds of the management in Canon’s imaging division. A New Zealand photographer, Rob Dickinson, got an early sample 6D mark II from Canon New Zealand, at least as I understand it, and he’s posted some early images from it. Some people in various communities, most notably Fred Miranda, have started looking at these images to determine the dynamic range of the camera.

On one hand, I do feel it’s important to point out that the images that all this discussion is based on are from a “pre release camera.”

That said, the 6D mark II is supposed to be released on the 27th of this month. That’s less than 3 weeks away. Right now, Canon is building up inventory to ship so that there is more than one camera to put on shelves on release day. The hardware is all final. There can’t and won’t be any tweaking to the sensor or any other hardware between now and the 27th.

In fact, the only thing that Canon could tweak is the firmware, and even that would almost certainly require a post-launch, user-applied update.

In short, my expectation is that the retail cameras will reflect the performance that’s being seen in the sample images from this particular pre-release sample.

Read the rest of the story »

The last time I wrote about a new camera I wasn’t planning on getting was when Nikon announced the D5 and D500 back in March of 2016: more than a year ago now. Since then, mum’s been the word, mostly because I just don’t find cameras to be all that interesting to talk about anymore.

In fact, I generally find the commentary that comes with a new camera to be obnoxious in the extreme. Still photographers are shouted over by people whining about 4K video and frame rates in cameras that are principally, if by ergonomics alone, still cameras. Moreover, there’s generally so much noise about stats, often represented incompletely or inaccurately, as if the performance of the camera’s sensor was the primary thing that made a picture good.

Or maybe I’m just getting prematurely old about all of this.

One point that’s been repeatedly driven home to me over the last 3-4 years is that the sensor doesn’t matter nearly as much as the person behind the camera. A great sensor will never make a mediocre picture better, and a comparatively poor quality sensor will never make an emotionally resonant picture fail. The difference between the widest dynamic range in the best medium format camera and the poorest APS-C sensor pales in comparison to what the person using it does with it.

Instead of caring so much about the sensor itself, I’m increasingly becoming convinced it’s the ancillary and supporting features that make the most difference. It’s things like GPS, autofocus, metering, frame rate, and most importantly the user interface, the things that make it easier to convert your vision into an image, that matter the most.

Read the rest of the story »

In my previous article on the US full-frame camera market size, I mentioned that it might be interesting to see if the strong fall signal was a product of the US commercialization of Christmas and how strong of a market signal it was. Since I had some of the data already cooking, I went back to the CIPA shipment statistics to see what the other regions looked like.

The short of it is that I’m almost entirely certain that the big uptick in shipments in the Americas in October was driven by the United States’ Christmas retail behavior. The same goes for the big downturn in shipments in December, January, and February: a post-holiday cool-off.

Interestingly, the “other” region had a similar signal, though I don’t know what countries “other” covers.

One final note: as alluded to in the title, these charts are based only on interchangeable lens camera (ILC) shipments from the Japanese camera makers that report shipments to the CIPA. Fixed-lens cameras, such as point-and-shoots, are not included.

Read the rest of the story »

Back in the beginning of April (2017) Sony USA put out a press release announcing that for the months of January and February they had taken the number 2 spot for full frame camera sales in the US. Needless to say, the photography press (and photo blog-o-sphere) ran with this, with many seemingly citing it as evidence to support a narrative that Canon and Nikon are clueless and Sony isn’t.

On a related note, I also recently wrote about the lack of data, and the problems that causes, when it comes to talking about camera gear, sales, and features. One of the biggest of those problems is that without clear data and context, it becomes hard if not impossible to identify outlying events, and, more broadly, outliers can be made to fit pretty much any narrative an author chooses.

This article is the result of, well, let’s be honest, Sony’s press release raising some questions for me. I started digging into whatever information I could find, as accurately as I could, which raised even more questions. I don’t think I really answer any of them completely, but then that really wasn’t the point of this kind of exercise for me anyway.

I don’t have any vested interest in any particular result, though many certainly do. However, as I said in my previous post on bias, without any kind of data and contextualization it’s hard to make sound judgments. While I don’t have tons of hard data, I do want to look at what data I can find, and at other market factors that may have been at play.

Moreover, the media and blog-o-sphere’s response to this struck a chord with me. Sites like DP Review (though I’m certainly not singling them out) have far more in the way of resources to talk about and add context to a press release like this, at least compared to someone like me. I don’t have a budget for review gear or sophisticated test benches, and I most certainly don’t have the readership and pull to be able to go to a camera manufacturer and ask for clarification on press releases that aren’t clear to me. But this kind of research doesn’t really get done, because it’s not as shiny and profitable as posting a fluffed up article about the newest camera that’s been announced.

So let me preface this article with this. There is a lot of speculation in this article. Most of this speculation is based on hard data, but not always the data I’d like it to be. I do talk about my assumptions and where I get things from. All told, I’m probably going to raise more questions than I can answer, but ultimately this is intended to be food for thought, perspective if you will, not a rebuttal or refutation of something.

Read the rest of the story »

You’ll have to forgive me, I have a bit of a rant here on the level of discussion on the Internet around cameras. Don’t get me wrong, I like talking about cameras and other camera gear, and even to some extent the apparent strategies of various manufacturers. Heck, I’ve even written a series of posts advancing ideas that I’d like to see implemented by camera makers. In fact, it’s probably safe to say that a good percentage of us photographers like engaging in these kinds of discussions.

At the same time, I find myself continually annoyed by the level of discourse on these kinds of topics. A large part of that, I believe, stems from the near complete lack of good data and information to work with in these kinds of discussions. As a result, it’s very easy to make claims that are both unsupported and subject to significant levels of bias.

It’s not just commenters on various discussion forums who are “guilty”, if you can even call it that, of not being able to discuss things effectively. Many authors of articles published by the photography press have fallen into the same traps of not having enough data to properly contextualize the things they’re talking about, or worse, just going for outright sensationalism like so much of the rest of the media.

Certainly there is data out there on things like the demand for features and actual market share rates at a segment level. However, it’s almost always not publicly available, and in many cases almost certainly proprietary and so will never become publicly available.

Read the rest of the story »